What is it?

Data refers to the raw information collected from any observation or measurement. This can include personal data such as habits, preferences, and location, as well as health, financial, business, and environmental data, and much more.

Algorithms, on the other hand, are sets of rules or instructions that are used to process data in order to detect patterns and predict outcomes.
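As a minimal illustration of that definition, the sketch below is a fixed set of instructions (an ordinary least-squares line fit) that detects a linear pattern in a few data points and uses it to predict a new outcome. The data, the scenario (hours of app use vs. ads clicked), and the function names `fit_line` and `predict` are hypothetical, chosen only for illustration.

```python
# A toy "algorithm": a fixed set of instructions that finds a pattern in data
# (a straight-line trend) and uses it to predict an unseen outcome.
# The data below is invented purely for illustration.


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Return (slope, intercept) of the ordinary least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(slope: float, intercept: float, x: float) -> float:
    """Apply the learned pattern to a new input."""
    return slope * x + intercept


# Hypothetical observations: hours of app use per day vs. ads clicked per day.
hours = [1.0, 2.0, 3.0, 4.0]
clicks = [2.0, 4.0, 6.0, 8.0]

slope, intercept = fit_line(hours, clicks)
print(predict(slope, intercept, 5.0))  # 10.0
```

The "pattern" here is only as good as the data it was fitted on: the rule faithfully extends whatever trend the observations contain.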

Artificial intelligence (AI) uses algorithms and large amounts of data to attempt to replicate the intelligence of biological creatures such as humans.

What can AI do?

What can AI not do?

What harms is it causing?

Harnessing algorithms & AI has allowed us to make huge advances in fields like healthcare, science, industry, business, and public decision-making. On social media, personalization has connected us to people and ideas like never before. AI in particular has the potential to transform every human activity, from transportation to material production to knowledge work.

However, these technologies have already caused a lot of harm, and if not designed, implemented, and regulated appropriately, these harms could increase exponentially.

Surveillance & Data Appropriation

The first component is ubiquitous surveillance: likes, purchases, location data, home devices, workplace monitoring, and public and private cameras.

Data Capitalism and Algorithmic Racism

Companies have relentlessly mined people for their faces, voices, and behaviors to enrich bottom lines… Mozilla’s Siminyu says it’s easy for individuals and communities to be disenfranchised; value is seen to come not from the people who give their data but from the ones who take it away. “They say, ‘Your voice isn’t worth anything on its own. It actually needs us, someone with a capacity to bring billions together, for each to be meaningful,’” she says.

A New Vision of Artificial Intelligence for the People

Exploitative Business Models

Data & Surveillance Capitalism: This refers to the business model in which human experience is captured to create prediction products that can then be sold, often to advertisers. This has led to a surge in polarization, because platforms are incentivized to keep users engaged for as long as possible. Conversation matters less than content, and the more incendiary the content, the better. As behaviors become digitized and legible, they are commodified, leading to a brandification of identity.

Much of what we call big data and data science is using that data to manipulate us to buy more stuff.

Data as the New Soil, Not Oil

Whether it is the gig economy company that tinkers with its incentive algorithms and sends pay plummeting for thousands of “independent contractors”… Under data capitalism, employers deploy data technology to intensify work, disempower workers, and dodge accountability for their workforce, while funneling the benefits of increased efficiency, lower costs, and higher profits to upper management and corporate shareholders, who are disproportionately white.

Data Capitalism and Algorithmic Racism

Opacity and Unaccountability

Algorithms are opinions embedded into code.

Data Capitalism and Algorithmic Racism

The reduction of human life into mere data points, like today’s data flows in corporations and the use of datasets by CEOs and corporate boards, allows for people at the top of the hierarchy to be responsible for the harm they cause but never accountable to the people they have harmed.

Data Capitalism and Algorithmic Racism

AI provides a pseudo-scientific basis for ailing social institutions to reduce costs and direct limited resources “objectively”, in a way that is difficult to challenge. As an increasing number of institutions adopt the ideology that the problems our society faces stem from individual failings rather than systemic ones, AI allows them to construct systems that “objectively” identify these “problem” individuals, shielding the actual causes of social strain from view.

Resisting AI - A Resonant Critique

When public entities contract with private companies to harvest and aggregate data… they effectively circumvent Fourth Amendment protections meant to defend individuals and communities from police abuses, while shielding law enforcement from public accountability, especially when contracts between tech companies and law enforcement are secret.

Data Capitalism and Algorithmic Racism

A majority of “ethical AI” work does not question whether largely unaccountable tech multinationals should be building tools that segregate and discriminate on such a massive scale in the first place, leaving us to focus on how to more accurately label and discriminate between individuals as a path towards social justice.

Resisting AI - A Resonant Critique

Racism, Discrimination & Exclusion

Models built by averaging data from entire populations have sidelined minority and marginalized communities even as they are disproportionately subjected to the technology’s impacts.

A New Vision of Artificial Intelligence for the People
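To make the averaging mechanism in the quote above concrete, here is a toy sketch with invented numbers: a one-parameter "model" that predicts a single value for everyone, chosen to minimize squared error over the whole population (that is, the population mean). Because the hypothetical majority group makes up 90% of the data, the mean sits close to its values. Real systems are far more complex; this only illustrates why optimizing for the average can hide poor performance on smaller groups.

```python
# Toy illustration (invented numbers): a "model" that predicts one value for
# everyone, fit by minimizing squared error over the whole population
# (i.e., it predicts the population mean).
from statistics import mean

# Hypothetical outcome values: 90 people in a majority group, 10 in a minority group.
majority = [10.0] * 90
minority = [20.0] * 10
population = majority + minority

prediction = mean(population)  # the squared-error-minimizing constant


def mean_abs_error(group: list[float], pred: float) -> float:
    """Average absolute gap between the model's prediction and a group's true values."""
    return mean(abs(v - pred) for v in group)


print(f"prediction: {prediction}")
print(f"majority error: {mean_abs_error(majority, prediction)}")
print(f"minority error: {mean_abs_error(minority, prediction)}")
print(f"overall error: {mean_abs_error(population, prediction)}")
```

With these numbers the prediction is 11.0. The overall average error (1.8) looks small, while the minority group's error (9.0) is nine times the majority's (1.0): a single population-wide score can look good while one group is served badly.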

The firm peddling facial recognition technology that disproportionately misidentifies people of color as wanted criminals… or the social media giant that lets advertisers exclude Black homebuyers from seeing real estate ads in particular neighborhoods… Those identified as financially desperate receive ads for predatory loan products and for-profit colleges, while those identified as affluent are targeted for high-paying jobs and low-interest banking products. The result is a feedback loop where people with less wealth, far more likely to be Black and brown people, continue to be marginalized and further excluded from wealth building, even when advertisers never explicitly target ads by race.

Data Capitalism and Algorithmic Racism

Data is the last frontier of colonization… Global AI development is impoverishing communities and countries that don’t have a say in its development—the same communities and countries already impoverished by former colonial empires.

A New Vision of Artificial Intelligence for the People

Chattel slavery was the first use case for big data systems to control, surveil, and enact violence in order to ensure global power and profit structures. Data on enslaved people and data on the business operations of plantations and slave traders flowed up and down hierarchies of management and ownership.

Data Capitalism and Algorithmic Racism

Negative Environmental Impact

The tech industry’s share of global greenhouse gas emissions could rise to 14% by 2040, more than half of the current relative contribution of the whole transportation sector and more than the current relative contribution of the US.

Training a single large AI model produced 300,000 kilograms of carbon dioxide emissions (Strubell et al., 2019). That’s roughly the equivalent of 125 round-trip flights from NYC to Beijing.

Big AI companies are aggressively marketing their (carbon-intensive) AI services to oil & gas companies, offering to help optimize and accelerate oil production and resource extraction.

AI and Climate Change
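The flight comparison in the climate figures above can be checked with one division: spreading the 300,000 kg attributed to Strubell et al. (2019) across 125 round trips implies about 2,400 kg of CO₂ per NYC to Beijing round trip. The source does not say what that unit covers; reading it as emissions per passenger is an assumption.

$$
\frac{300{,}000\ \text{kg CO}_2}{125\ \text{round trips}} = 2{,}400\ \text{kg CO}_2\ \text{per round trip}
$$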

What opportunities are there for regeneration?

Data Commons & Stewardship

Efforts to manage data for privacy, such as HIPAA and the GDPR, are complicated, difficult, and bureaucratic rather than commons-enhancing.

Data as the New Soil, Not Oil

Evgeny Morozov and Francesca Bria suggest that cities and their residents should treat data as a public meta-utility, and should “appropriate and run collective data on people, the environment, connected objects, public transport, and energy systems as commons… in support of autonomous self-governance.”

Data Capitalism and Algorithmic Racism

(A project revitalizing the Māori language through AI) created a data license that spells out the ground rules for future collaborations based on the Māori principle of kaitiakitanga, or guardianship. It will only grant data access to organizations that agree to respect Māori values, stay within the bounds of consent, and pass any benefits derived from its use back to the Māori people. Questions remain about its enforceability.

A New Vision of Artificial Intelligence for the People

Communal Governance

People should be able to exert democratic collective bargaining power over their data, so that they can jointly decide how it is used and negotiate appropriate compensation.

Data Dignity

Workers at companies like Uber, TaskRabbit, and Instacart should be able to form worker-owned co-ops to provide staffing services to gig companies. As envisioned by the California Cooperative Economy Act, these staffing cooperatives would be collectively owned and democratically controlled by workers, with the power to negotiate terms of work with platform companies.

Data Capitalism and Algorithmic Racism

Decentralization & Interoperability

These platforms, especially aggregators, are incentivized to resist decentralization and interoperability. After all, ‘data is the new oil’, and these services depend almost entirely on making sure that only they have access to that valuable data. Interoperability, on the other hand, means you no longer have a data moat or a privileged hub position in the network. Decentralization is about agency: we get to choose where we store our data, whom we give access to which parts of it, which services we want on top of it, and how we pay for those services. Much like how the Net Neutrality debate strives to maintain the separation of the content and connectivity markets, data neutrality strives to maintain the separation of the data and application markets.

Towards Data Neutrality
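One way to picture the agency described in the data neutrality passage (choosing where data lives and which services may see which parts of it) is a user-controlled data store that grants each application access only to named categories of data, and can revoke that access. The sketch below is hypothetical: the `PersonalDataStore` class, its methods, and the app names are invented for illustration and do not correspond to any particular protocol or product.

```python
# Hypothetical sketch: a personal data store, held wherever the user chooses,
# that grants each application access only to specific categories of data.
from dataclasses import dataclass, field


@dataclass
class PersonalDataStore:
    # The user's data, grouped by category (e.g. "location", "contacts").
    data: dict[str, list[str]] = field(default_factory=dict)
    # Which categories each application may read, as chosen by the user.
    grants: dict[str, set[str]] = field(default_factory=dict)

    def grant(self, app: str, category: str) -> None:
        """Allow an application to read one category of data."""
        self.grants.setdefault(app, set()).add(category)

    def revoke(self, app: str, category: str) -> None:
        """Withdraw a previously granted permission (no-op if absent)."""
        self.grants.get(app, set()).discard(category)

    def read(self, app: str, category: str) -> list[str]:
        """Return a copy of the data if the app holds a grant; otherwise refuse."""
        if category not in self.grants.get(app, set()):
            raise PermissionError(f"{app} has no access to {category}")
        return list(self.data.get(category, []))


store = PersonalDataStore(data={"location": ["home", "office"], "contacts": ["Ana"]})
store.grant("maps_app", "location")
print(store.read("maps_app", "location"))  # ['home', 'office']
store.revoke("maps_app", "location")  # the user can withdraw access at any time
```

Because the store rather than the application holds the data, switching to a competing app means granting it the same categories instead of asking the incumbent to export them, which removes the "data moat" the passage describes.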

Algorithmic Transparency

Demand that all algorithms be fully explainable. Tech companies object that this would slow down the pace of AI innovation until explainable AI is further developed, but this tradeoff is well worth it.

Require algorithms used for public purposes (for example, those used by cities, states, counties, and public agencies to make decisions about housing, policing, or public benefits) to be open, transparent, and subject to public debate and democratic decision-making.

Require audits of private algorithms deployed in a commercial setting, enabling public evaluation.

Require audit logs of the data fed into automated systems: if the algorithms themselves are too complex to understand, logs of the data fed into them can still be analyzed.

Data Capitalism and Algorithmic Racism
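The demand for audit logs of the data fed into automated systems can be sketched as a wrapper that records every input and output of a decision function before returning the result. The `audited` decorator, the `eligibility_score` function, its fields and threshold, and the JSON-lines log format are all hypothetical choices for illustration; a real audit regime would also need tamper resistance, retention rules, and privacy protections that this sketch omits.

```python
# Hypothetical sketch: record the data fed into an automated decision system,
# so the inputs can be analyzed later even if the model itself is opaque.
import json
from datetime import datetime, timezone
from functools import wraps
from typing import Any, Callable

AUDIT_LOG: list[str] = []  # stand-in for an append-only log file or service


def audited(system_name: str) -> Callable:
    """Decorator that logs each call's inputs and output as one JSON line."""
    def decorator(func: Callable) -> Callable:
        @wraps(func)
        def wrapper(**inputs: Any) -> Any:
            # Keyword-only calls, so every input is logged under its name.
            result = func(**inputs)
            AUDIT_LOG.append(json.dumps({
                "system": system_name,
                "timestamp": datetime.now(timezone.utc).isoformat(),
                "inputs": inputs,
                "output": result,
            }, sort_keys=True))
            return result
        return wrapper
    return decorator


@audited("benefits-eligibility")
def eligibility_score(income: float, household_size: int) -> bool:
    """Toy decision rule standing in for an opaque model (invented threshold)."""
    return income / household_size < 15_000


eligibility_score(income=40_000, household_size=3)
print(AUDIT_LOG[0])
```

The decision logic stays untouched; only the wrapper changes, so an auditor can later analyze who was scored, on what inputs, and with what result, without needing to understand the model.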

Data Health

Unhealthy data is data you can’t trust. So one aspect of healthy data is the reliability of each datum, right? We don’t focus on that much right now. If we actually thought of a data layer as healthy soil, what are all the positive things that would help prevent damage?

Data as the New Soil, Not Oil

Dive Deeper

Questions to Explore:

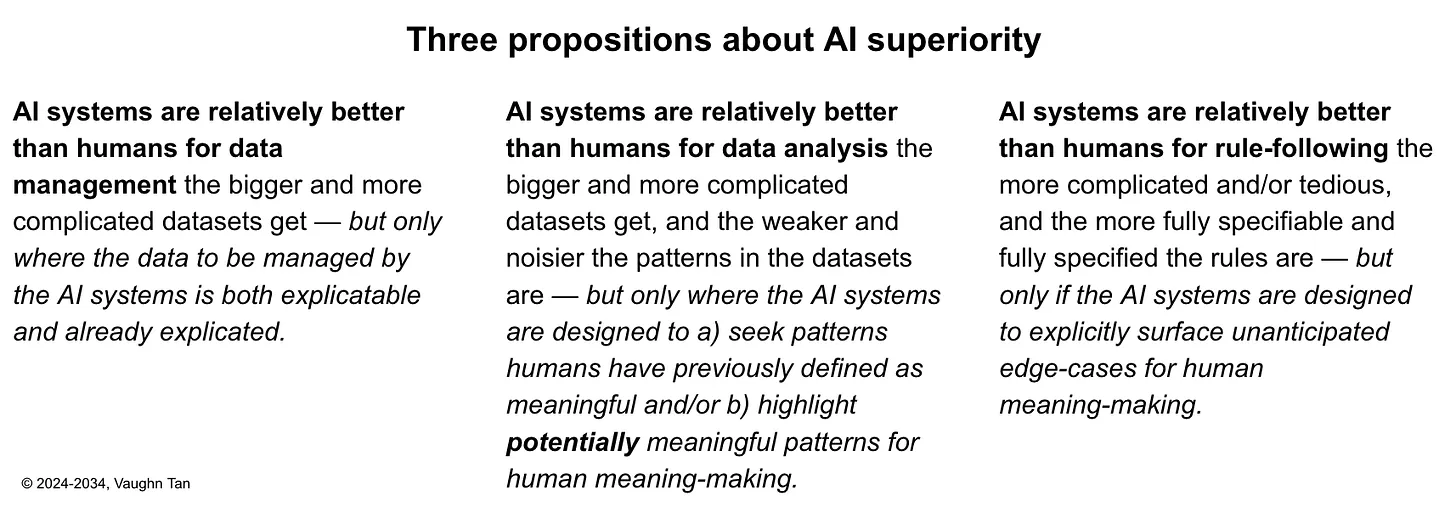

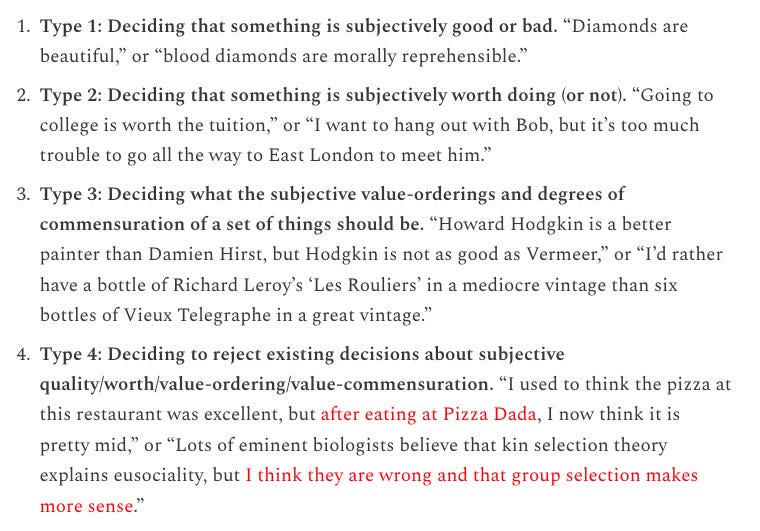

What meaning-making work are we giving to AI, particularly in the context of governance? How are AI models and products that claim to incorporate wisdom handling meaning-making work?

Topic relates to:

Data & Surveillance Capitalism

Cooperatives & Community Exits

Further Reading:

Indigenous AI

Data Capitalism and Algorithmic Racism

Resisting AI - A Resonant Critique

Ways of Being - Animals, Plants, Machines

Towards Data Neutrality